Project Overview

Healthcare delivery often struggles with the same issues from place to place: long waits, fragmented handoffs, and uneven quality. Research on these problems is usually either richly qualitative with limited actionability, or quantitative with limited context. My project was built to bridge that gap. I combine applied anthropology with systems engineering to see both the human stories and the system structure; then I translate that view into clear, defensible choices for administrators.

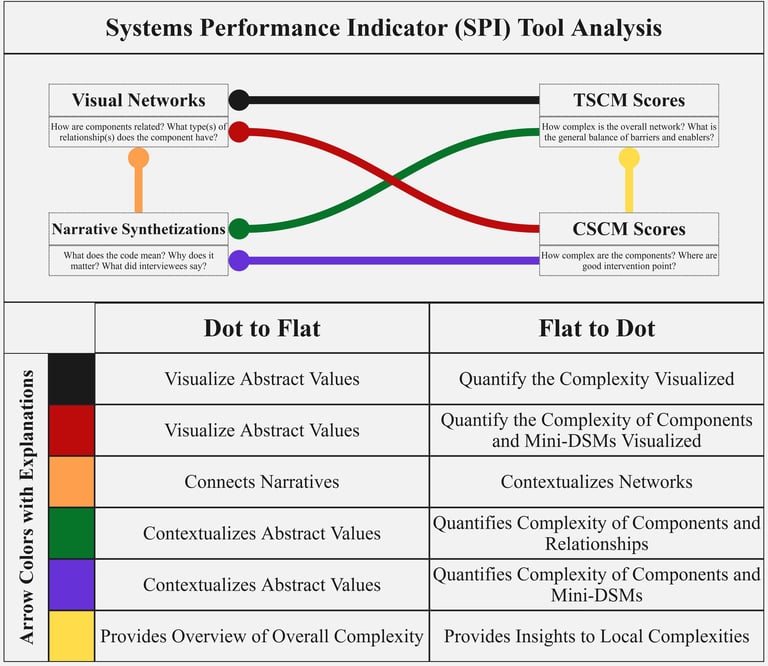

At the center is the Systems Performance Indicator (SPI). SPI takes coded interview evidence and maps it as a network of barriers and enablers; it measures how tangled parts of the system are and how easily each part can change; then it ranks where to act first. In practice this means three things: 1) a short list of high-leverage targets; 2) “bundles” of co-interventions when neighboring issues are tightly linked; and 3) a written “why this, why now” rationale that ties every recommendation back to the data.
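To make the map, measure, rank idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the SPI implementation: the tie data, the `changeability` scores, the use of weighted degree as a stand-in for "how tangled" a part is, and the multiply-and-sort scoring rule are all hypothetical choices made for this example.

```python
from collections import defaultdict

# Hypothetical coded ties: (source, target, sign, weight).
# sign is +1 when the source tends to improve the target, -1 when it worsens it.
ties = [
    ("long waits", "missed follow-ups", -1, 3),
    ("fragmented handoffs", "long waits", -1, 2),
    ("fragmented handoffs", "missed follow-ups", -1, 2),
    ("shared records", "fragmented handoffs", +1, 1),
]

# Hypothetical scores in [0, 1] for how easily each part can change right now.
changeability = {
    "long waits": 0.3,
    "missed follow-ups": 0.6,
    "fragmented handoffs": 0.5,
    "shared records": 0.8,
}


def entanglement(ties):
    """Weighted degree: total tie weight touching each node, incoming or outgoing.

    The sign is kept in the data for the map but is not used in this score.
    """
    score = defaultdict(float)
    for source, target, _sign, weight in ties:
        score[source] += weight
        score[target] += weight
    return dict(score)


def rank_targets(ties, changeability):
    """Rank nodes by entanglement times changeability (an assumed scoring rule)."""
    tangled = entanglement(ties)
    scored = {
        node: tangled[node] * changeability.get(node, 0.0) for node in tangled
    }
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


ranking = rank_targets(ties, changeability)
for node, score in ranking:
    print(f"{node}: {score:.2f}")
```

Multiplying the two measures favors parts of the system that are both highly connected and feasible to change; a real scoring rule could weight them differently or treat helping and harming ties separately.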

The work was developed in the Province of Buenos Aires, Argentina, across multiple communities and subgroups (geography, insurance, income). It produces administrator-ready outputs: Spanish summaries for practitioners, interactive network maps, and a repeatable analysis pipeline. Although the metrics were calibrated to healthcare, the approach is transferable to other public-good systems like social services or transportation, and even to private-sector questions such as market entry or behavior change, with modest re-tuning.

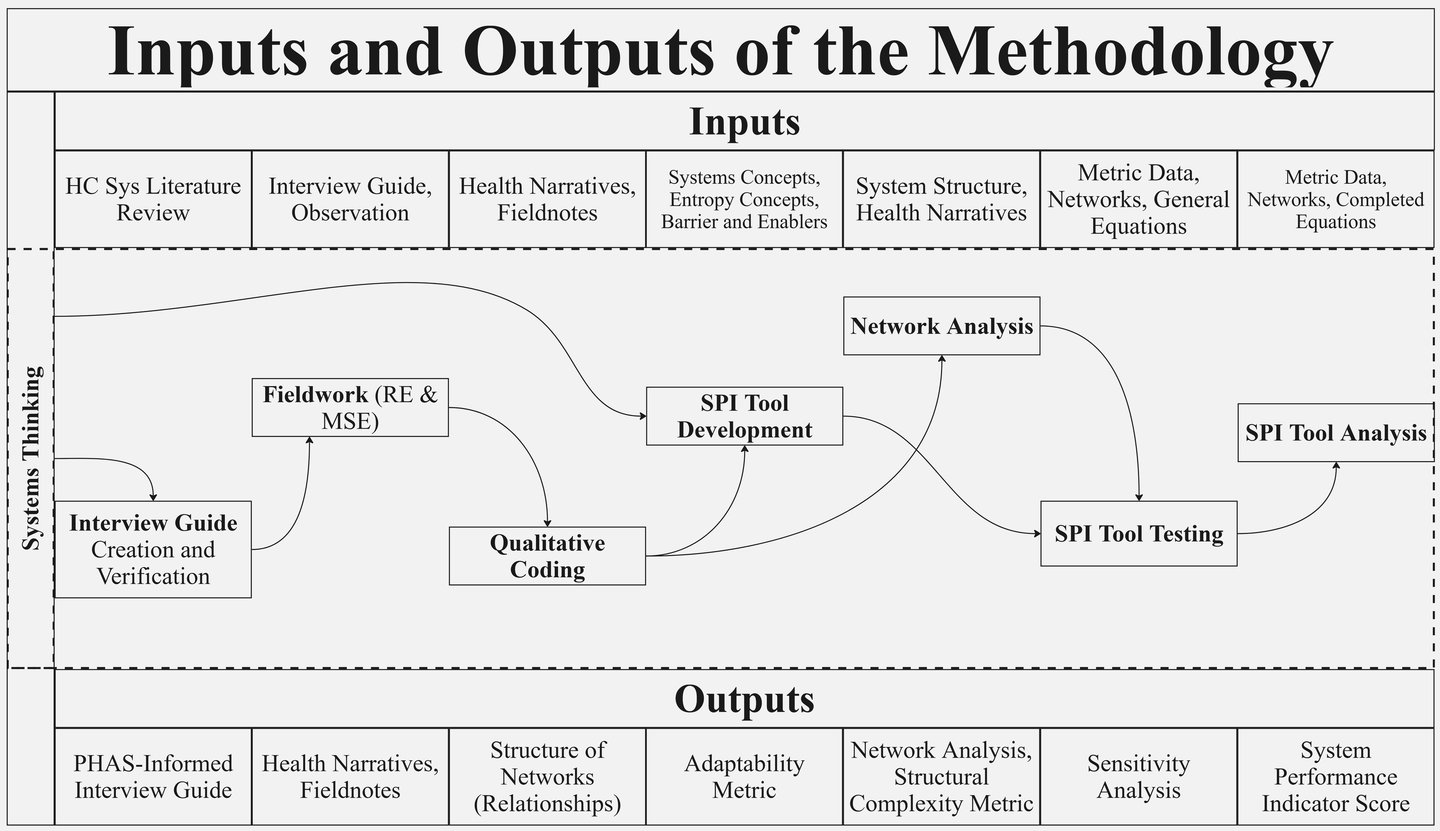

Practically, this project helps teams move from description to decision. If you need to know which bottlenecks matter most, how a fix will ripple to other parts of the system, and whether a change is feasible right now, SPI gives you an evidence-based path. The result is not just a diagnosis; it is an actionable plan that respects local context, highlights trade-offs, and makes the reasoning transparent. The visual below shows how the SPI tool works.

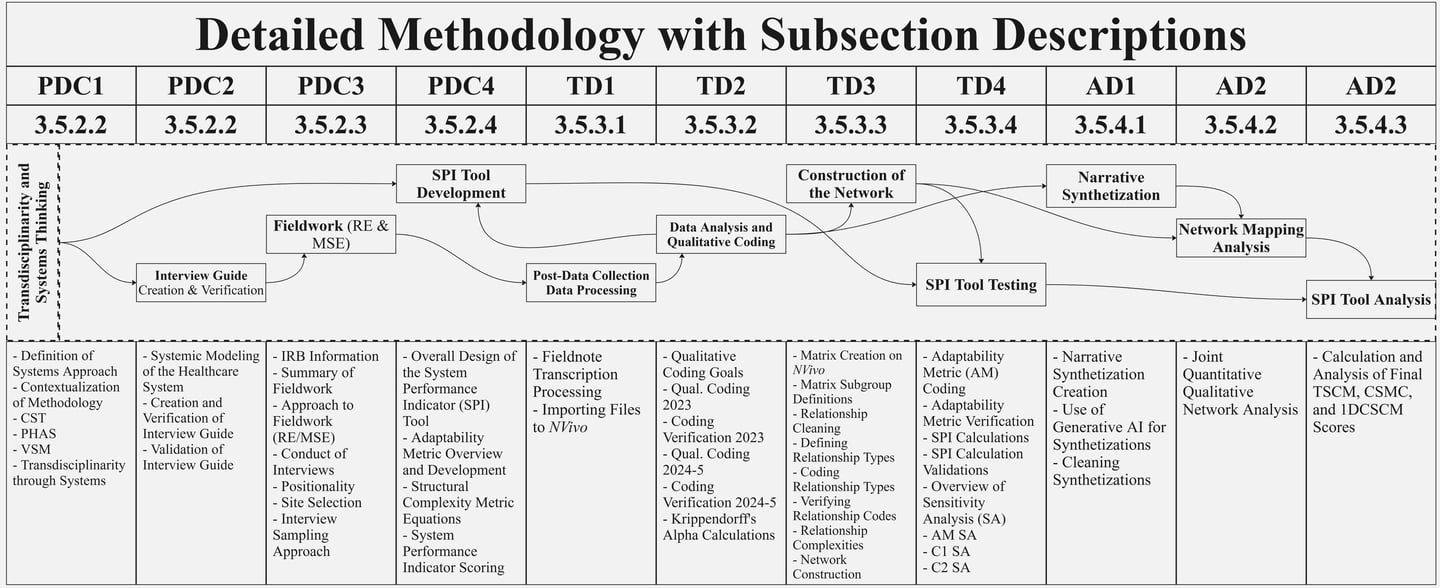

Methodology Overview

This project used a transdisciplinary systems approach that bridged applied anthropology and systems science to study healthcare delivery in the Province of Buenos Aires. In practice, the team applied rapid, multi-site ethnography to capture how patients, providers, and administrators experienced barriers and enablers in everyday care. The Purposeful Human Activity Systems lens was used to scope the problem and shape a Spanish interview guide that kept attention on people, processes, policies, and relationships. Fieldwork sites and analyses were stratified by geography, insurance type, and income so that subgroup differences were not averaged away. Participation was voluntary; narratives were anonymized and handled under an ethics protocol that prioritized participant privacy. This design allowed the work to remain grounded in lived experience while keeping a clear line of sight to system behavior and decision needs.

After fieldwork, interviews were transcribed and imported into NVivo. Codes were developed through an iterative, grounded process anchored to the research questions; intercoder reliability was checked before analysis proceeded. From the verified codes, the team built concept and network maps that made relationships among barriers and enablers explicit (for example, “A worsens B,” “C improves D”), and each tie was noted for whether it tended to help or harm. These maps were rendered for exploration and filtered to highlight stronger or more consequential links. The approach (listen, code, validate, map) turned qualitative evidence into structured representations that showed where issues clustered, how local problems propagated, and where coordinated fixes might be needed. Because subgroup cuts were preserved, the same workflow supported side-by-side comparisons across locations, insurance regimes, and income bands.
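As an illustration of the intercoder reliability check, the sketch below computes Cohen's kappa for two coders who labeled the same excerpts. The write-up does not say which agreement statistic was used, so kappa is an assumption here, and the labels and coder data are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: agreement between two coders, corrected for chance."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of excerpts.")
    n = len(coder_a)

    # Observed agreement: share of excerpts given the same label by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n

    # Expected agreement by chance, from each coder's label frequencies.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        # Both coders used one identical label throughout; treat as full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels assigned by two coders to the same eight excerpts.
coder_a = ["barrier", "barrier", "enabler", "enabler",
           "barrier", "enabler", "barrier", "enabler"]
coder_b = ["barrier", "barrier", "enabler", "barrier",
           "barrier", "enabler", "barrier", "enabler"]

kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.2f}")
```

A team would typically agree on an acceptable threshold before coding begins and reconcile the codebook when agreement falls below it.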

Finally, the Systems Performance Indicator (SPI) synthesized the evidence into decision outputs: a shortlist of high-leverage targets, co-intervention “bundles” when neighboring issues were tightly linked, and a “why this, why now” rationale that traced each recommendation back to its sources. The result was a reproducible analysis that balanced qualitative depth with quantitative discipline and delivered administrator-ready guidance while remaining transparent enough for other teams to rerun or adapt in new settings.
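One way to picture the co-intervention "bundles" is as groups of issues that remain connected once weak ties are filtered out. The sketch below is a simplified illustration under that assumption; the tie weights, the `min_weight` threshold, and the connected-components rule are hypothetical and are not taken from the SPI itself.

```python
def bundles(ties, min_weight):
    """Group issues joined by ties at or above min_weight into bundles.

    Issues with no sufficiently strong tie are left out of every bundle.
    """
    # Build an undirected neighbor map from the strong ties only.
    neighbors = {}
    for source, target, weight in ties:
        if weight >= min_weight:
            neighbors.setdefault(source, set()).add(target)
            neighbors.setdefault(target, set()).add(source)

    seen = set()
    groups = []
    for start in sorted(neighbors):
        if start in seen:
            continue
        # Depth-first walk to collect everything reachable from this issue.
        stack = [start]
        group = set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            group.add(node)
            stack.extend(neighbors[node] - seen)
        groups.append(sorted(group))
    return groups


# Hypothetical ties: (issue, linked issue, strength of the link).
example_ties = [
    ("long waits", "missed follow-ups", 3),
    ("fragmented handoffs", "long waits", 2),
    ("shared records", "fragmented handoffs", 1),
    ("staff shortages", "overtime", 4),
]

result = bundles(example_ties, min_weight=2)
for group in result:
    print(group)
```

Raising `min_weight` yields smaller, tighter bundles; lowering it merges more issues into a single coordinated intervention.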